Three years ago, Luminal co-founder Joe Fioti was working on chip design at Intel when he came to a realization. He was trying to build the best chips he could, but the real bottleneck was software.

He told me, “Even the best hardware is useless if developers find it difficult to use; they simply won’t use it.”

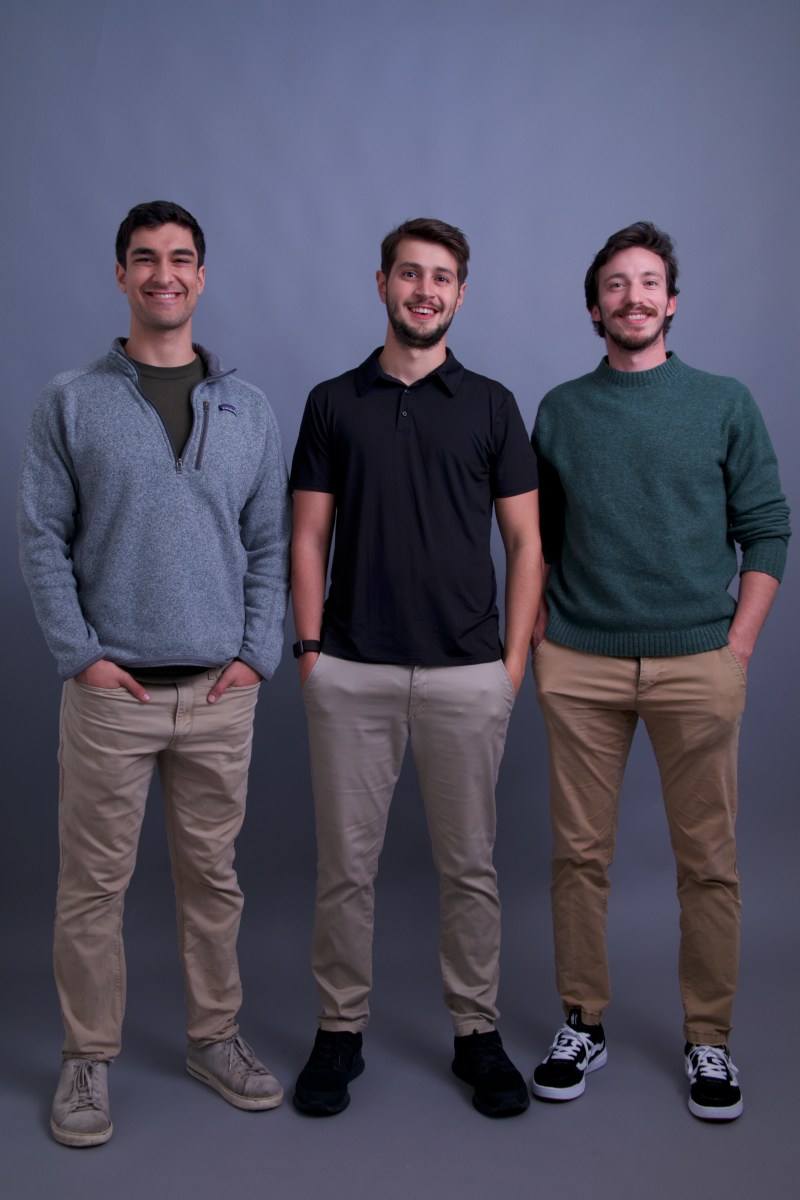

He has since launched a company dedicated to solving that problem. On Monday, Luminal announced a $5.3 million seed round led by Felicis Ventures, with angel investments from Paul Graham, Guillermo Rauch, and Ben Porterfield.

Jake Stevens and Matthew Gunton, Fioti’s co-founders, previously worked at Apple and Amazon, respectively. The company participated in Y Combinator’s Summer 2025 cohort.

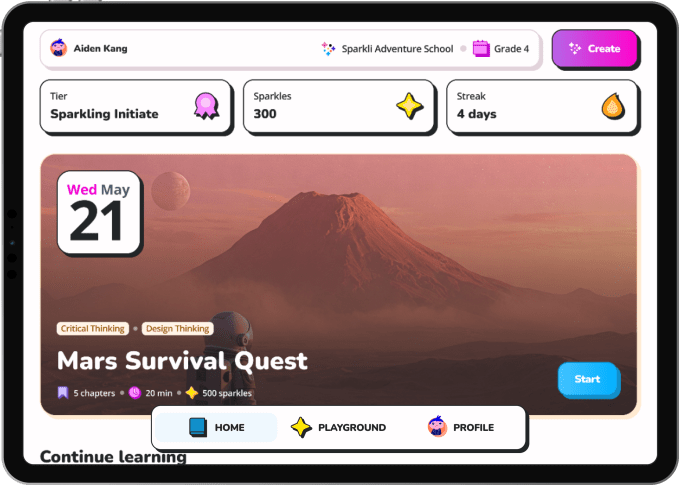

Luminal’s core business is straightforward: the company sells compute, much like neo-cloud providers such as CoreWeave or Lambda Labs. But where those companies focus on GPUs, Luminal focuses on optimization techniques that let it extract more processing power from its infrastructure. Specifically, the company works on the compiler that sits between written code and the GPU hardware, the same developer tooling that caused Fioti so much trouble in his prior role.

Currently, Nvidia’s CUDA system is the industry’s leading compiler, a crucial but sometimes overlooked factor in the company’s remarkable success. However, many components of CUDA are open-source, and Luminal anticipates that, with the ongoing GPU shortage in the industry, significant value can be created by enhancing the rest of the software stack.

Luminal is part of a growing wave of inference-optimization startups, which have gained prominence as businesses look for faster and cheaper ways to run their models. Inference providers such as Baseten and Together AI have long specialized in optimization, and smaller companies like Tensormesh and Clarifai are now focusing on more specialized techniques.

Luminal and its peers will face fierce competition from optimization teams inside the major labs, which have the advantage of optimizing for a single family of models. Luminal, working for clients, has to adapt to whatever models those clients bring. Still, Fioti believes the market is growing fast enough to offset the risk of being outmatched by the hyperscalers.

Fioti says, “It will always be possible to spend six months hand-tuning a model architecture on specific hardware, and you’ll probably outperform any compiler. But we’re betting that the general-purpose use case, anything less than that, is still highly economically valuable.”